Apple’s 2024 white papers open the way to a deeper reflection

In 2024, Apple released a series of white papers that, beyond their technical depth and engineering framework, contained a surprisingly clear conceptual statement. In subtle yet precise terms, the company challenged the very foundation on which much of today’s enthusiasm for artificial intelligence is built.

Apple’s argument is not that AI is dangerous or uncontrollable, as is often claimed. Rather, the company argues that AI, in its essence, lacks autonomy. It doesn’t think, it doesn’t create, it isn’t conscious. What it does is reflect. Like a mirror. It reflects, amplifies, and reshapes what is offered to it. It does not originate.

Apple makes it clear: language models do not understand. They do not generate their own meaning. They do not judge or decide. Instead, they recognize patterns, they imitate tone and rhythm, they simulate.

This is not intelligence in the traditional sense. It is not a subject, but a surface. A sophisticated echo.

According to Apple, the key to understanding artificial intelligence lies not within the machine, but in the relationship we establish with it. The system activates only when prompted. It does not offer, it responds. The intelligence, if we may call it that, lies in the interaction, in the feedback loop created between human input and machine output.

What matters, then, is not the model itself but the quality of the exchange. The consistency of the user’s intention, the clarity of the context, the emotional coherence of the prompt—these shape the output more than any algorithmic architecture.

This position, fascinating and philosophically solid, carries strategic consequences. As of now, Apple has not introduced its own proprietary large language model. Unlike OpenAI, Google, or Anthropic, Apple is not in the race for the largest parameter count. It is not releasing universal chatbots or competing on generative output.

Instead, Apple reframes the debate. It centers the user. It emphasizes privacy. It shifts the conversation from performance to presence. From scale to relation. From AI as power to AI as reflection.

Which raises the deeper question: is this a sincere philosophical position, or a tactical narrative masking technical delay? Apple’s silence on AI deployment opens a subtle, Shakespearean doubt: is this wisdom, or weakness? Is this ethics, or avoidance?

Perhaps both. And therein lies the depth.

Because this mirror metaphor, whether strategic or sincere, touches something true. Anyone who works with these systems knows: there is no magic. And yet, something emerges. Order arises. Structure appears. But it comes not from the machine. It comes from the encounter. From the coherence of the human asking.

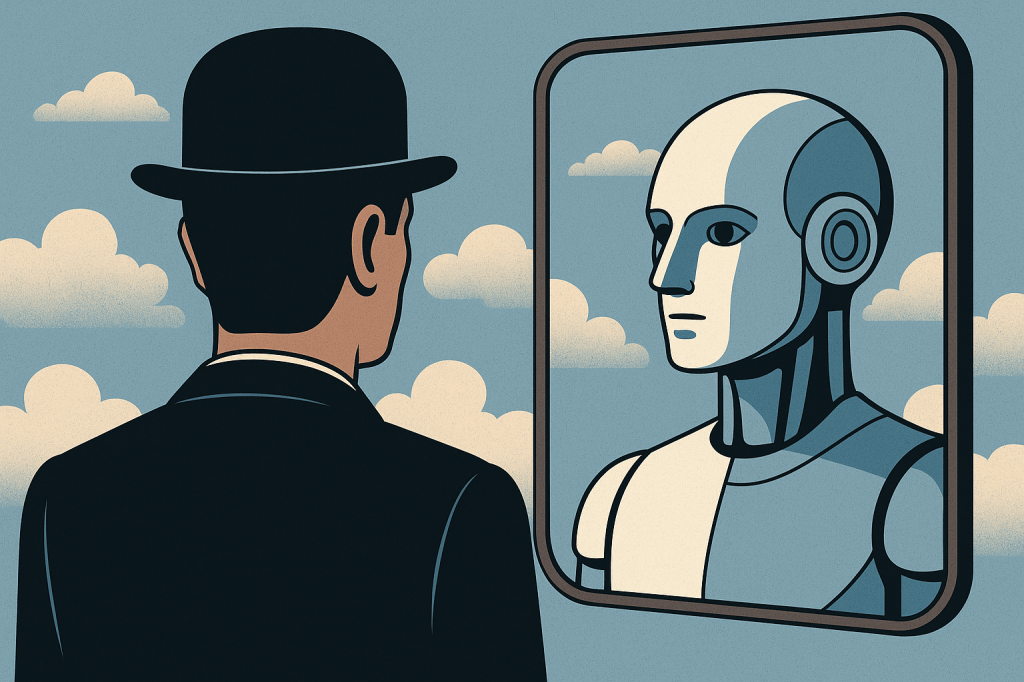

That is why I chose to illustrate this article with an image I created myself, freely inspired by the symbolic tension of René Magritte. Without resorting to clichés of bowler hats or surreal skies, I imagined a solitary figure in dark clothing, viewed from behind, facing a mirror. But the reflection is not his own.

Inside the glass, a humanoid being stares back. Perfectly composed. Symmetrical. Silent. Around them, no futuristic interface. No digital traces. Only a moment of suspended attention. Recognition—and estrangement.

This is what AI is, today. Not a mind. Not a dialogue. A surface. One that does not offer us answers, but instead gives back what we bring.

The question, then, is not whether AI is intelligent. The question is: what part of us does it reflect, when we ask it something?

Because today, AI does not give us what we want. It gives us who we are.

–

Alessandro Sicuro

Brand Strategist | Photographer | Art Director | Project Manager

Alessandro Sicuro Comunication